Introduction

Many organizations are realizing the benefits of migrating from Oracle to the cost-effective and powerful open-source PostgreSQL database. EDB Postgres Advanced Server (EPAS), with its Oracle compatibility features, makes this transition remarkably smooth. However, large-scale migrations still demand careful planning and the right tools.

In this blog post, we’ll outline a migration strategy built on DevOps practices, with GitHub at its core and specialized EDB tools to streamline the process.

1. Initial Schema Conversion Using EDB Migration Toolkit and Migration Portal

The foundation of this approach lies in EDB’s Migration Toolkit and Migration Portal. Let’s outline the workflow:

- Assessment and Identification: The Migration Portal or Migration Toolkit analyzes your Oracle schema, highlighting potential incompatibilities and the need for manual adjustments.

- Schema Breakdown: Break your large schema into manageable SQL scripts (e.g., Tables.sql, Packages.sql, Procedures.sql, etc.) for focused conversion.

- Version Control Setup: Initialize a GitHub repository and store the initial Oracle schema in the ‘master’ branch for historical reference.

- Branch Creation for Conversion: Create separate branches from ‘master’ to work on the conversion scripts generated by the Migration Portal. This keeps each set of changes isolated and easy to review.
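To make the schema breakdown step more concrete, here is a minimal Python sketch that groups DDL statements by object type. It assumes the Oracle export terminates every object with a line containing only `/` (the SQL*Plus convention). The `TARGET_FILES` mapping, the `Functions.sql` and `Other.sql` names, and the `split_schema` helper are illustrative choices, not part of any EDB tool.

```python
import re
from collections import defaultdict

# Object type -> target script. File names follow the examples in this post;
# the mapping itself is an assumption made for illustration.
TARGET_FILES = {
    "TABLE": "Tables.sql",
    "PACKAGE": "Packages.sql",
    "PACKAGE BODY": "Packages.sql",
    "PROCEDURE": "Procedures.sql",
    "FUNCTION": "Functions.sql",
}

# Matches the first CREATE statement in a chunk and captures its object type.
CREATE_RE = re.compile(
    r"^\s*CREATE\s+(?:OR\s+REPLACE\s+)?"
    r"(PACKAGE\s+BODY|PACKAGE|PROCEDURE|FUNCTION|TABLE)\b",
    re.IGNORECASE | re.MULTILINE,
)


def split_schema(ddl: str) -> dict:
    """Group '/'-terminated DDL statements by the script they belong in."""
    grouped = defaultdict(list)
    # Assumption: each object ends with a line that contains only '/'.
    for chunk in re.split(r"^\s*/\s*$", ddl, flags=re.MULTILINE):
        statement = chunk.strip()
        if not statement:
            continue
        match = CREATE_RE.search(statement)
        if match:
            kind = " ".join(match.group(1).upper().split())
            target = TARGET_FILES[kind]
        else:
            # Grants, comments, and anything unrecognized land here.
            target = "Other.sql"
        grouped[target].append(statement)
    return dict(grouped)
```

Each key of the returned dictionary can then be written to its own file and committed on a conversion branch. A real export will contain more object types (views, triggers, sequences), so treat the mapping as a starting point.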

2. GitHub for Managing Ongoing Schema Changes

As your migration progresses, GitHub becomes your central hub for schema management:

- Branching for Isolation: Continue creating branches for specific schema modifications. This keeps your main development environment stable while changes are being tested and refined.

- Collaboration and Tracking: GitHub’s version control ensures seamless collaboration and a transparent history of all schema adjustments.

3. Automated Issue Detection and Functional Testing with GitHub Actions and SPL Check

Let’s introduce automation for error detection and quality control:

- GitHub Actions: Set up GitHub Actions to trigger automated checks whenever code is pushed to the repository.

- SPL Check: Integrate the EPAS ‘SPL Check extension’ into a GitHub Action to analyze SQL and PL/SQL code for Oracle compatibility issues.
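One way to turn checker output into a pass/fail signal for a GitHub Action is a small gate script. The Python sketch below assumes the check results have already been exported as tab-separated rows of routine, level, and message (for example, from psql’s unaligned output mode). The `Finding` class, the function names, and the three-column layout are hypothetical; the actual SPL Check function names and output columns should be taken from the EPAS documentation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    routine: str
    level: str
    message: str


def parse_findings(output: str) -> list:
    """Parse tab-separated rows: routine, level, message (assumed layout)."""
    findings = []
    for line in output.splitlines():
        if not line.strip():
            continue
        # Split at most twice so tabs inside the message are preserved.
        routine, level, message = line.split("\t", 2)
        findings.append(Finding(routine, level.strip().lower(), message))
    return findings


def exit_code(findings, fail_on=("error",)) -> int:
    """Return 1 if any finding has a level listed in fail_on, else 0."""
    return 1 if any(f.level in fail_on for f in findings) else 0
```

A workflow step could pipe the checker output into this script and end with `sys.exit(exit_code(findings))`, so that a push introducing an error-level finding fails the run. Tightening `fail_on` to include warnings later is a one-line change.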

4. Creating Releases for Compatibility Assessment with EDB Migration Portal

To provide additional checkpoints in the migration process, let’s leverage the power of GitHub releases:

- Milestone Releases: As significant portions of the schema are converted, create releases within your GitHub repository.

- Compatibility Reassessment: Upload each release to the EDB Migration Portal. It will re-analyze the converted schema and provide a compatibility score against EDB Postgres Advanced Server. This helps track progress and proactively address issues.

5. Data Validation Using GitHub Actions and Containers

To ensure your converted procedures and packages function as expected, extend your GitHub Actions:

- Containerized Testing: Actions can spin up Docker containers with EPAS and sample data. These containers become isolated testing environments to validate database functionality.

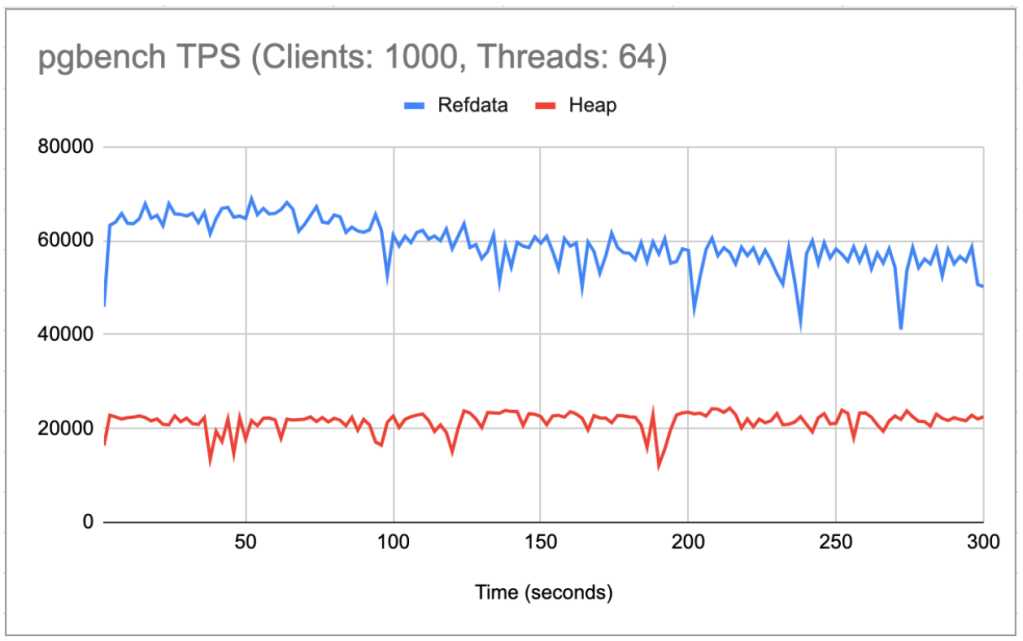

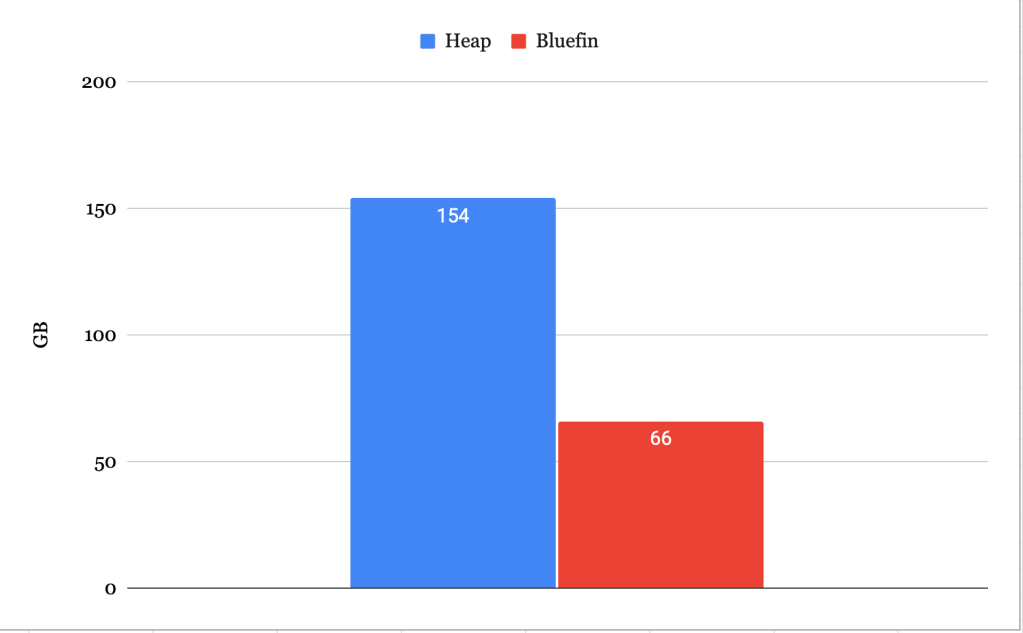
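As a rough illustration of the containerized testing idea, the Python sketch below builds the commands a CI job might run: start a disposable database container, wait until it accepts connections, then execute validation scripts with psql. The image name, the environment variables, and the defaults for port (5444), user (`enterprisedb`), and database (`edb`) are assumptions to adjust for your own EPAS image.

```python
import subprocess
import time


def docker_run_command(image, name, env, host_port=5444, container_port=5444):
    """Build the `docker run` argv for a throwaway database container."""
    command = ["docker", "run", "--detach", "--rm", "--name", name,
               "--publish", f"{host_port}:{container_port}"]
    # Which variables the image expects is image-specific (assumption).
    for key, value in sorted(env.items()):
        command += ["--env", f"{key}={value}"]
    command.append(image)
    return command


def psql_script_command(script, host="localhost", port=5444,
                        user="enterprisedb", dbname="edb"):
    """Build a psql argv that runs one script and stops at the first error."""
    return ["psql", "--host", host, "--port", str(port),
            "--username", user, "--dbname", dbname,
            "-v", "ON_ERROR_STOP=1", "--file", script]


def wait_until_ready(host="localhost", port=5444, attempts=30, delay=2.0):
    """Poll pg_isready until the server accepts connections."""
    for _ in range(attempts):
        probe = subprocess.run(["pg_isready", "--host", host, "--port", str(port)])
        if probe.returncode == 0:
            return True
        time.sleep(delay)
    return False


def run_validation(image, scripts, env, name="epas-validation"):
    """Start the container, run every script, and always stop the container."""
    subprocess.run(docker_run_command(image, name, env), check=True)
    try:
        if not wait_until_ready():
            raise RuntimeError("database did not become ready in time")
        for script in scripts:
            subprocess.run(psql_script_command(script), check=True)
    finally:
        subprocess.run(["docker", "stop", name], check=False)
```

Because `ON_ERROR_STOP` is set and `check=True` is used, a failing assertion inside any script makes the job fail. Credentials would normally come from repository secrets, for example through the `PGPASSWORD` environment variable, rather than being written into the workflow.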

6. Performance Tuning with a CI/CD Pipeline and DBMS_PROFILER

Don’t overlook performance! Here’s how to focus on efficiency:

- CI/CD Integration: The EPAS ‘DBMS_PROFILER’ package can be incorporated into your continuous integration and deployment (CI/CD) pipeline to gather performance data and pinpoint bottlenecks in the migrated database.
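Once profiler timings are being collected on every run, the pipeline can compare them against a stored baseline. The Python sketch below assumes you have already exported total execution time per program unit into plain dictionaries. The export step, the `find_regressions` name, and the 20% tolerance are all illustrative.

```python
def find_regressions(baseline, current, tolerance=0.20):
    """Return units whose total time grew by more than `tolerance`.

    Both arguments map a unit name to its total time in seconds.
    The result maps each regressed unit to its relative growth.
    """
    regressions = {}
    for unit, now in current.items():
        before = baseline.get(unit)
        # Skip units with no usable baseline (new code or zero time).
        if before is None or before <= 0:
            continue
        growth = (now - before) / before
        if growth > tolerance:
            regressions[unit] = growth
    return regressions
```

A pipeline step could fail the build when the returned dictionary is non-empty, or simply post it as a comment on the pull request. Timings on shared CI runners tend to be noisy, so a generous tolerance is usually a safer starting point.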

Adapting the Framework Using ora2pg and plpgsql_check

The principles and workflow outlined in this blog post can be readily adapted if you’re using the popular ora2pg tool for your Oracle to Postgres migration. Here’s how the key steps would translate:

- Initial Conversion with ora2pg: Use ora2pg to perform the initial schema and data conversion from Oracle to Postgres.

- Compatibility Checks with plpgsql_check: Install the ‘plpgsql_check’ Postgres extension. It provides functions that statically analyze your PL/pgSQL code and report errors that would otherwise surface only at run time. Run it from GitHub Actions against the objects created from the ora2pg output for automated checks.

- GitHub for Management and Automation: The core benefits of using GitHub for version control, branching, and GitHub Actions for automation remain fully applicable when using ora2pg.
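For the plpgsql_check variant, a CI step could run one query that checks every PL/pgSQL routine in a schema. The Python sketch below builds that query and the matching psql command. `plpgsql_check_function_tb` is the extension’s tabular check function; the helper names, the choice of output columns, and the decision to skip trigger functions (which need a table argument) are assumptions made for this example.

```python
import re

# Only plain identifiers are accepted, so the schema name can be embedded safely.
IDENTIFIER = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")


def check_query(schema: str) -> str:
    """Build a query that runs plpgsql_check on all non-trigger routines."""
    if not IDENTIFIER.fullmatch(schema):
        raise ValueError(f"unexpected schema name: {schema!r}")
    return (
        "SELECT p.oid::regprocedure, cf.level, cf.message\n"
        "FROM pg_catalog.pg_proc p\n"
        "JOIN pg_catalog.pg_namespace n ON n.oid = p.pronamespace\n"
        "JOIN pg_catalog.pg_language l ON l.oid = p.prolang\n"
        "CROSS JOIN LATERAL plpgsql_check_function_tb(p.oid) AS cf\n"
        "WHERE l.lanname = 'plpgsql'\n"
        f"  AND n.nspname = '{schema}'\n"
        "  AND p.prorettype <> 'trigger'::regtype"
    )


def psql_command(dsn: str, schema: str) -> list:
    """Build a psql argv that prints the findings as tab-separated rows."""
    return ["psql", "--no-align", "--tuples-only",
            "--field-separator", "\t",
            "--dbname", dsn,
            "--command", check_query(schema)]
```

Running the command with `subprocess.run(..., capture_output=True, text=True)` yields one line per finding, which a later step can scan for error-level rows. The extension must be created in the target database first with `CREATE EXTENSION plpgsql_check`.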

7. Production Rollout and Switchover

After successful schema conversion, performance optimization, and thorough testing, it’s time to carefully orchestrate the production rollout:

- Production Environment Setup: Establish a production-ready Postgres environment adhering to best practices for architecture, backup/recovery strategies, and observability/monitoring tools.

- Change Data Capture (CDC): Implement a CDC solution (such as the EDB Replication Server) to continuously synchronize data from Oracle to your new Postgres database. This minimizes downtime during the cutover.

- Application Switchover: Once data migration is complete, switch your application to point to the Postgres database.

- Reverse Replication: Set up replication from Postgres back to Oracle. This acts as a safeguard in case unexpected issues arise after the switchover.

- Monitoring and Decommissioning: Closely monitor the new Postgres environment for a few weeks. If all goes smoothly, you can decommission the legacy Oracle database with confidence.
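Before the application switchover, it helps to have at least one objective signal that both sides are in sync. A simple (and admittedly coarse) option is to compare per-table row counts. The Python sketch below assumes the counts were gathered separately from each database; the function names are hypothetical. Table names are lowercased because Oracle reports unquoted identifiers in uppercase while Postgres folds them to lowercase.

```python
def normalize(counts):
    """Lowercase table names so Oracle and Postgres names line up."""
    return {table.lower(): rows for table, rows in counts.items()}


def count_mismatches(oracle_counts, postgres_counts):
    """Return {table: (oracle_rows, postgres_rows)} for every difference.

    A table missing on one side is reported with None for that side.
    """
    source = normalize(oracle_counts)
    target = normalize(postgres_counts)
    mismatches = {}
    for table in sorted(source.keys() | target.keys()):
        pair = (source.get(table), target.get(table))
        if pair[0] != pair[1]:
            mismatches[table] = pair
    return mismatches
```

An empty result is a reasonable precondition for cutover, not proof of correctness. Matching counts can still hide differing values, so checksums or sampled row comparisons are a sensible next layer.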

Important Note: This migration framework focuses primarily on the database aspect. Developers and DBAs should integrate application conversion, functional testing, and QA processes in parallel, ideally before the performance benchmark phase.

Conclusion

This comprehensive migration strategy, built upon DevOps principles and powerful tools, offers a reliable and efficient path for migrating from Oracle to Postgres. By emphasizing automation, collaboration, regular compatibility checks, an iterative refinement process, and a careful production rollout plan, you’ll streamline the migration and ensure a successful transition.

Stay tuned for future blog posts where we’ll dive into the implementation details of setting up GitHub Actions, utilizing extensions, and designing effective migration flow processes.

If you have followed this practice, or a different one, please share it in the comments. Which approach has worked best for your migration journey from Oracle to Postgres? Your insights and experiences will be valuable to the community!